Object space

An object space is a known set of features in the environment that is observed in the calibration data. It often takes the form of a fiducial marker, a calibration target, or a similar mechanism. Along with the system's Plex and the calibration dataset, the object space is one of the main user inputs to MetriCal.

One of the most difficult problems in calibration is cross-modality data correlation. A camera is fundamentally 2D, while LiDAR is 3D. How do we bridge the gap? By using the right object space! Many seasoned calibrators are familiar with the checkerboards and grids used for camera calibration; these are object spaces as well.

Object spaces, as used by MetriCal, define the parameters needed to accurately and precisely detect and use features seen across modalities in the environment. This section serves as a reference for the different kinds of object spaces, detectors, and features supported by MetriCal, and, perhaps more importantly, for how to combine these object spaces.

Diving Into The Unknown(s)

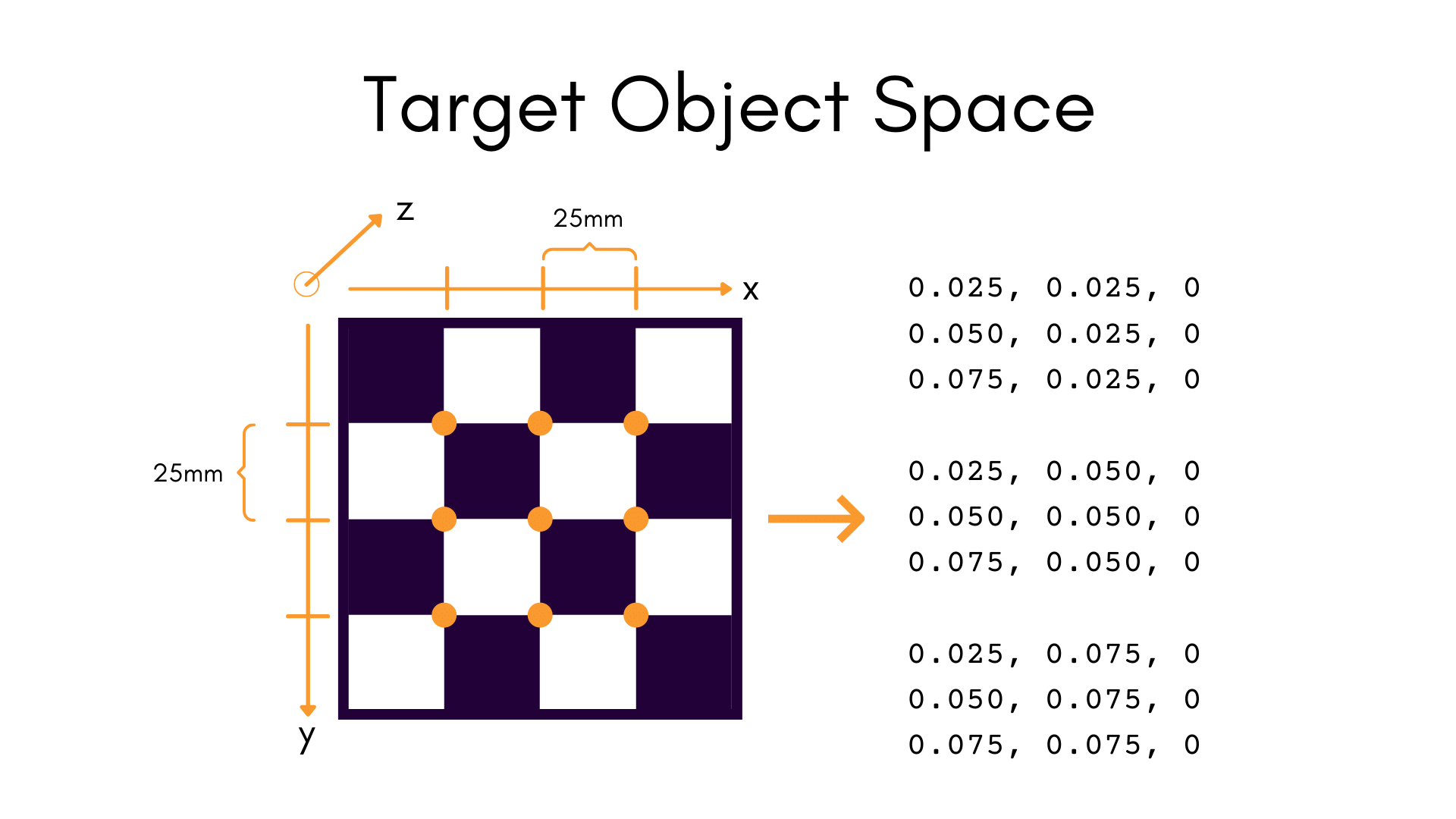

Calibration processes often use external sources of knowledge to learn values that a component (a camera, for instance) couldn't derive on its own. Sticking with cameras as our example component: there is no sense of scale in a photograph. A photo of a mountain could show the full-size mountain, or it could show a diorama; the camera has no way of knowing, and the image by itself cannot communicate the metric scale of what it contains.

This is where object spaces come into play. If we place a target with known metric properties in the scene, the image now contains a reference for metric space.
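To make the scale ambiguity concrete, here is a minimal Python sketch using an idealized pinhole camera. The focal length, principal point, and target dimensions are made-up illustration values, not MetriCal defaults, and `project`/`recover_scale` are hypothetical helpers. Shrinking the whole scene by 10x yields identical pixel coordinates, while knowing the target's true side length recovers the missing factor.

```python
import numpy as np

def project(points_cam: np.ndarray, f: float = 800.0, c=(320.0, 240.0)) -> np.ndarray:
    """Ideal pinhole projection of N x 3 camera-frame points to N x 2 pixel coordinates."""
    x = points_cam[:, 0] / points_cam[:, 2]
    y = points_cam[:, 1] / points_cam[:, 2]
    return np.column_stack((f * x + c[0], f * y + c[1]))

# Four corners of a square target, 0.5 m on a side, 3 m in front of the camera.
target = np.array([[-0.25, -0.25, 3.0],
                   [ 0.25, -0.25, 3.0],
                   [ 0.25,  0.25, 3.0],
                   [-0.25,  0.25, 3.0]])

# The "diorama": the same scene scaled down by 10x about the camera center.
diorama = target / 10.0

# Both scenes land on exactly the same pixels, so the image alone can't tell them apart.
print(np.allclose(project(target), project(diorama)))

def recover_scale(points_up_to_scale: np.ndarray, known_side: float) -> float:
    """Scale factor that makes the first target edge match its known metric length."""
    measured = np.linalg.norm(points_up_to_scale[1] - points_up_to_scale[0])
    return known_side / measured

# Knowing the target is really 0.5 m wide fixes the scale (a factor of about 10 here).
print(recover_scale(diorama, 0.5))
```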

Most component types require some target field like this for proper calibration. For LiDAR, we use a circular target comprising a checkerboard and some retroreflective tape; similarly, for cameras, MetriCal supports a whole host of different checkerboards and signalized markers. Each of these fiducials is referred to as an object space.

Object Spaces Are Optimized

In MetriCal, even object space points have covariance! This reflects the imperfection of real life: even the sturdiest target can warp and bend, which creates uncertainty. We embed this possibility in the covariance of each object space point. That way, you can have greater confidence in the results, even if your target is not the perfectly "flat" or idealized geometric abstraction that is often assumed in calibration software.

One of MetriCal's most distinctive capabilities is the ability to optimize object space. With MetriCal, it is possible to calibrate with imperfect boards without inducing projective compensation errors in your final results.
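As a rough illustration of how per-point variances can enter an optimization, the sketch below treats object space points as free parameters tied to their nominal (flat-board) positions by a Gaussian prior. This is a generic least-squares technique, not MetriCal's internal formulation; the helper names are hypothetical, and the variances are assumed to be in m² and to correspond to the descriptor's `variances` field in the serialization example.

```python
import numpy as np

# Per-axis variances of each object space point, like the descriptor's
# "variances" field. ASSUMPTION: units are m^2.
variances = np.array([0.0002, 0.0002, 0.0002])
information = np.diag(1.0 / variances)  # inverse of the diagonal covariance

def prior_cost(estimated: np.ndarray, nominal: np.ndarray, info: np.ndarray) -> float:
    """Sum of squared Mahalanobis distances between estimated and nominal points (N x 3)."""
    r = estimated - nominal
    return float(np.einsum("ni,ij,nj->", r, info, r))

def map_estimate(nominal: float, prior_var: float, observed: float, obs_var: float) -> float:
    """MAP estimate of one coordinate from a Gaussian prior and one Gaussian observation."""
    w_prior, w_obs = 1.0 / prior_var, 1.0 / obs_var
    return (w_prior * nominal + w_obs * observed) / (w_prior + w_obs)

# Two corners of a nominally flat board (0.125 m apart), slightly warped out of plane.
nominal = np.array([[0.0, 0.0, 0.0], [0.125, 0.0, 0.0]])
warped = nominal + np.array([[0.0, 0.0, 0.002], [0.0, 0.0, -0.003]])

# A small warp adds only a small penalty, so the points can move off the ideal plane
# when the observations support it.
print(prior_cost(warped, nominal, information))  # ≈ 0.065

# If observations put a corner 4 mm off the plane with equal confidence,
# the estimate lands halfway between the flat model and the measurement.
print(map_estimate(0.0, 0.0002, 0.004, 0.0002))  # ≈ 0.002
```

In a full adjustment, a term like `prior_cost` would be added to the reprojection cost, so a warped board can be absorbed into the object space estimate rather than pushed into the sensor parameters.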

Multi-Target Calibrations? No Problem.

In addition to optimizing the object space itself, MetriCal can also optimize across multiple object spaces. By specifying multiple targets in a scene, it becomes possible to calibrate complex scenarios that wouldn't otherwise be feasible. For example, when multiple object spaces are used, MetriCal can optimize the extrinsics between two cameras whose fields of view have zero overlap.
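Without overlap, the two cameras never observe a common target, so their relative pose has to be composed through the targets. Below is a minimal NumPy sketch of that chaining with 4x4 homogeneous transforms. All poses are made-up values, the target-to-target transform is simply assumed to be known (for example, from a spatial constraint), and the helpers are hypothetical; this shows only the geometry, not how MetriCal solves for it.

```python
import numpy as np

def make_pose(yaw_rad: float, t) -> np.ndarray:
    """4x4 homogeneous transform: rotation about z by yaw_rad, plus translation t."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T

def invert(T: np.ndarray) -> np.ndarray:
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

# Convention: T_a_b maps points expressed in frame b into frame a.
T_camA_target1 = make_pose(0.0, [0.0, 0.0, 2.0])     # camera A observes target 1
T_camB_target2 = make_pose(np.pi, [0.5, 0.0, 3.0])   # camera B observes target 2
T_target1_target2 = make_pose(0.0, [4.0, 0.0, 0.0])  # ASSUMED known (e.g. a spatial constraint)

# Chain camA <- target1 <- target2 <- camB; the cameras never need a shared view.
T_camA_camB = T_camA_target1 @ T_target1_target2 @ invert(T_camB_target2)
print(np.round(T_camA_camB, 3))
```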

Serialization and Schema

Like the Plex, object spaces are serialized as JSON (either inline objects or files) and passed into MetriCal in many of its modes. Below is an example JSON object, followed by a brief description of the keys and types in the serialized object space format.


{
  "object_spaces": {
    "24e6df7b-b756-4b9c-a719-660d45d796bf": {
      "descriptor": {
        "variances": [0.0002, 0.0002, 0.0002]
      },
      "detector": {
        "markerboard": {
          "checker_length": 0.125,
          "corner_height": 7,
          "marker_dictionary": "Aruco4x4_1000",
          "marker_id_offset": 0,
          "marker_length": 0.097,
          "corner_width": 7
        }
      }
    },
    "123cdf7b-b756-4b9c-a719-660d45d795ae": {
      "descriptor": {
        "variances": [0.0002, 0.0002, 0.0002]
      },
      "detector": {
        "markerboard": {
          "checker_length": 0.125,
          "corner_height": 7,
          "marker_dictionary": "Aruco4x4_1000",
          "marker_id_offset": 30,
          "marker_length": 0.097,
          "corner_width": 7
        }
      }
    },
    "34e6df7b-b756-4b9c-a719-660d45d796bf": {
      "descriptor": {
        "variances": [1.0, 1.0, 1.0]
      },
      "detector": {
        "circle": {
          "radius": 0.60
        }
      }
    }
  },
  "spatial_constraints": [],
  "mutual_construction_groups": [
    [
      "24e6df7b-b756-4b9c-a719-660d45d796bf",
      "34e6df7b-b756-4b9c-a719-660d45d796bf"
    ]
  ]
}

| Field | Type | Description |
|---|---|---|
| object_spaces | A map of UUID to object space objects | The collection of object spaces (identified by UUID) to use. Each object space comprises a detector/descriptor pair. |
| spatial_constraints | An array of spatial constraint objects (between object spaces) | The spatial constraints (extrinsics and covariances) between multiple object spaces, if any. |
| mutual_construction_groups | An array of groups, each an array of object space UUIDs | Sets of object spaces that form a mutual construction: the object spaces are part of the same board, or are otherwise joined together in some meaningful way. At present, this applies only to our LiDAR circle target. |

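The example above demonstrates a multi-object-space setup with two ChArUco boards, both using the same Aruco4x4_1000 dictionary, combined with our LiDAR circle object space. The first board and the circle are listed together in a mutual construction group because, together, the checkerboard and the circle make up the LiDAR circle target.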