LiDAR Data Capture

LiDAR data capture falls into two categories, depending on how your system is configured:

- LiDAR ↔ Camera calibrations

- LiDAR ↔ LiDAR calibrations

Depending on your system, one or both of the above categories may apply to you. Below are tips and guidelines for capturing LiDAR data (point clouds) for use in calibration.

Required Object Space

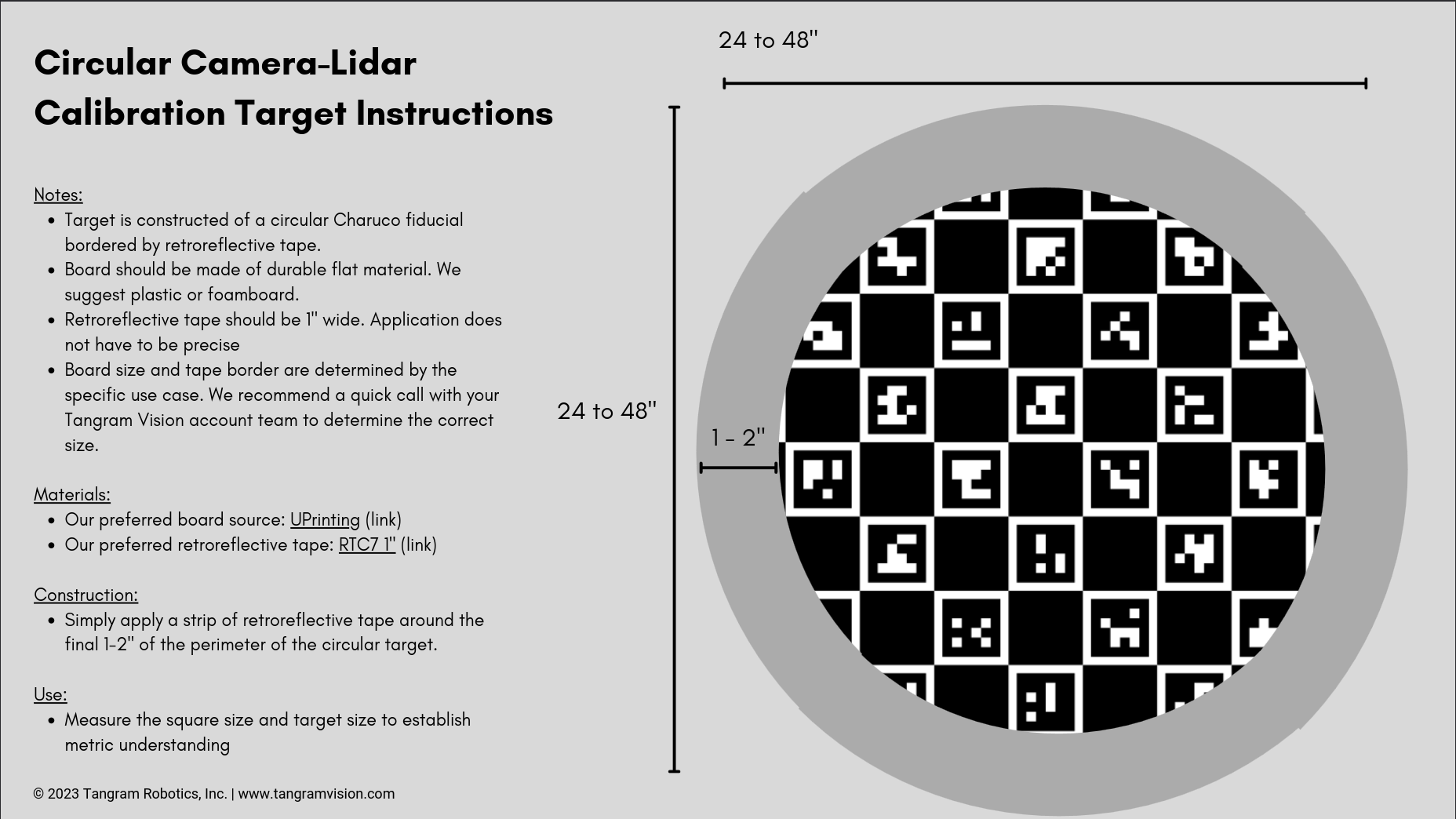

All of MetriCal's LiDAR calibrations require a custom target as the object space. See the chart in this section for more information.

LiDAR ↔ Camera Calibration

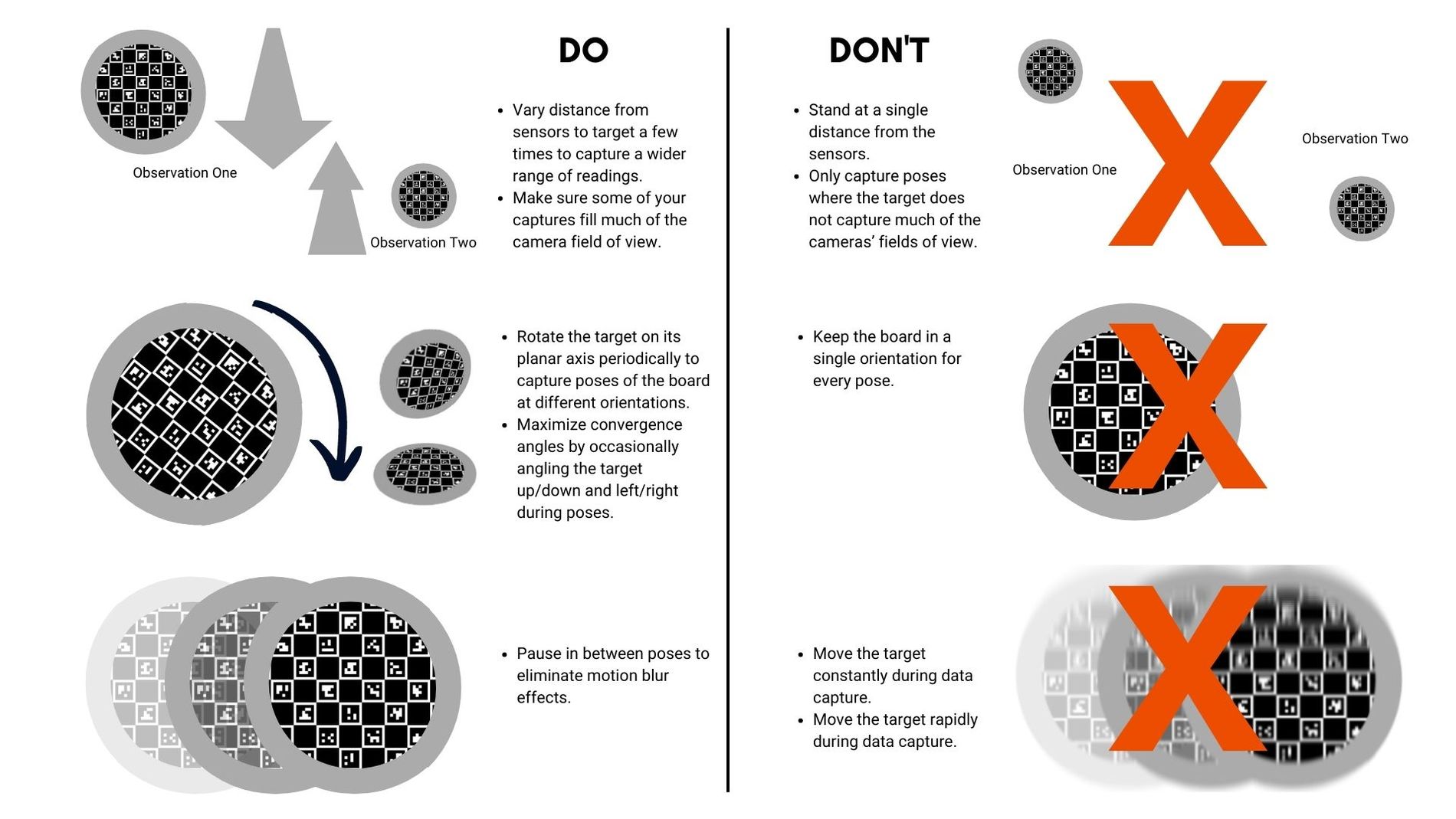

LiDAR ↔ Camera calibrations currently solve only for the extrinsics between the LiDAR and camera components. The calibration is nonetheless a joint calibration, because the camera intrinsics are estimated at the same time. See the chart in this section for guidelines on capturing LiDAR data for a LiDAR ↔ Camera calibration.

Incorporating Camera Intrinsics Calibration

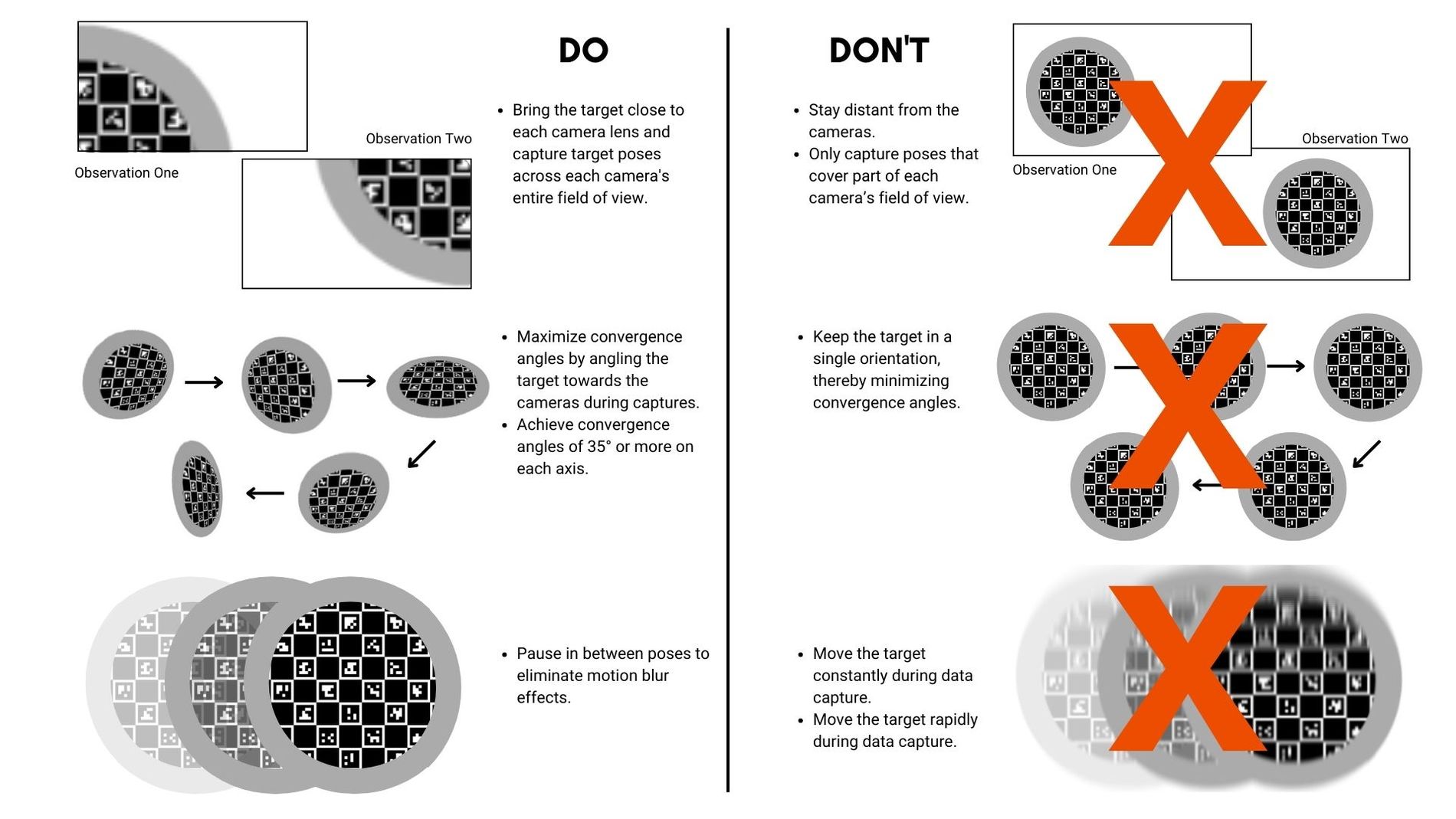

Additionally, see the following guide for incorporating camera intrinsics calibration alongside the guidelines above.

For more information on producing good camera calibrations using the LiDAR circle target, see our camera data capture guide. The circular target used in LiDAR ↔ Camera calibrations is 100% compatible with camera-only calibrations and can be used similarly.

LiDAR ↔ LiDAR Calibration

Many of the tips for LiDAR ↔ LiDAR data capture also apply to LiDAR ↔ Camera capture, and vice versa. Below are the best practices for capturing a dataset that consistently produces a high-quality calibration between two LiDAR components.

Maximize Variation Across All 6 Degrees Of Freedom

When capturing the circular target with multiple LiDAR components, ensure that the target can be seen from a variety of poses. In particular, this means varying:

- The roll rotation of the target relative to the LiDAR

- The pitch rotation of the target relative to the LiDAR

- The yaw rotation of the target relative to the LiDAR

- X, Y, and Z translations between poses

Regarding translation, MetriCal performs LiDAR-based calibrations best when the target is seen from a range of depths. While the target must still stay visible to as many sensors as possible, vary the distance between the target and the LiDAR in addition to moving laterally relative to the LiDAR itself.
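One way to check a recorded dataset against this advice is to estimate the target pose in each frame and measure how widely each quantity varies. The sketch below assumes you already have per-frame target poses in the LiDAR frame from some other tool; the `TargetPose` type, the `pose_spread` and `flag_low_variation` helpers, and the default thresholds are all hypothetical and are not part of MetriCal.

```python
import math
from dataclasses import dataclass


@dataclass
class TargetPose:
    """Hypothetical target pose in the LiDAR frame (angles in degrees, translation in metres)."""
    roll: float
    pitch: float
    yaw: float
    x: float
    y: float
    z: float


def pose_spread(poses):
    """Return the min-to-max spread of each rotation axis and of target range (depth)."""
    def spread(values):
        return max(values) - min(values)

    # Range from the LiDAR origin is used as the "depth" of each pose.
    depths = [math.sqrt(p.x ** 2 + p.y ** 2 + p.z ** 2) for p in poses]
    return {
        "roll_deg": spread([p.roll for p in poses]),
        "pitch_deg": spread([p.pitch for p in poses]),
        "yaw_deg": spread([p.yaw for p in poses]),
        "depth_m": spread(depths),
    }


def flag_low_variation(spreads, min_angle_deg=30.0, min_depth_m=2.0):
    """List quantities whose spread falls below the (assumed, illustrative) thresholds."""
    flagged = []
    for key, value in spreads.items():
        limit = min_depth_m if key == "depth_m" else min_angle_deg
        if value < limit:
            flagged.append(key)
    return flagged
```

For example, three poses that vary in roll, pitch, and depth but barely in yaw would produce `["yaw_deg"]`, suggesting more yaw variation is needed before recording ends.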

In short, this means filling the fields of view of the LiDAR components being calibrated. This is similar to filling the field of view of a camera, except that a LiDAR's field of view is often much wider.
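A rough way to quantify field-of-view coverage is to bin target positions by azimuth and elevation and count how many cells were visited. This is a minimal sketch, assuming per-frame target centroids in the LiDAR frame and a spinning LiDAR with a 360° horizontal and ±15° vertical field of view; the `fov_coverage` name, the bin counts, and the field-of-view limits are illustrative assumptions, so substitute your sensor's specifications.

```python
import math


def fov_coverage(centroids, az_bins=12, el_bins=4,
                 az_range=(-180.0, 180.0), el_range=(-15.0, 15.0)):
    """Fraction of azimuth/elevation cells containing at least one target centroid.

    `centroids` is an iterable of (x, y, z) target positions in the LiDAR frame.
    The default field-of-view limits are assumptions for a typical spinning LiDAR.
    """
    az_min, az_max = az_range
    el_min, el_max = el_range
    occupied = set()
    for x, y, z in centroids:
        az = math.degrees(math.atan2(y, x))
        el = math.degrees(math.atan2(z, math.hypot(x, y)))
        if not (az_min <= az <= az_max and el_min <= el <= el_max):
            continue  # outside the assumed field of view
        # Clamp so values on the upper edge fall into the last bin.
        i = min(int((az - az_min) / (az_max - az_min) * az_bins), az_bins - 1)
        j = min(int((el - el_min) / (el_max - el_min) * el_bins), el_bins - 1)
        occupied.add((i, j))
    return len(occupied) / (az_bins * el_bins)
```

A low coverage fraction after a capture session suggests the target stayed in too small a region of the LiDAR's field of view.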

Occasionally Pause Between Different Poses

MetriCal performs more consistently when the captured data contains less motion blur and fewer motion-based artefacts. For the best calibrations, pause for 1-2 seconds after every pose of the target. A dataset with constant motion will typically yield poor results.
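If you want to check that a recording actually contains these pauses, one approach is to track the target between frames and look for runs where it barely moves. The sketch below is a hypothetical heuristic, not MetriCal's target detector: it approximates the target position as the centroid of high-intensity returns (the retroreflective tape), then reports time windows in which the centroid moves no more than `max_step_m` between consecutive frames for at least `min_duration_s`. The intensity threshold, step size, and function names are assumptions; intensity scales differ between sensors.

```python
import math


def target_centroid(points, min_intensity=200.0):
    """Centroid of high-intensity returns, a rough (assumed) proxy for the retroreflective tape.

    `points` is an iterable of (x, y, z, intensity). Returns None if no return is bright enough.
    """
    bright = [(x, y, z) for x, y, z, i in points if i >= min_intensity]
    if not bright:
        return None
    n = len(bright)
    return tuple(sum(p[k] for p in bright) / n for k in range(3))


def stationary_windows(frames, max_step_m=0.02, min_duration_s=1.0):
    """Return (start, end) timestamps of runs where the target barely moved.

    `frames` is a time-ordered list of (timestamp_s, centroid) pairs; a None
    centroid (target not found) ends the current run.
    """
    windows = []
    run_start = None
    prev_t, prev_c = None, None
    for t, c in frames:
        still = (prev_c is not None and c is not None
                 and math.dist(c, prev_c) <= max_step_m)
        if still:
            if run_start is None:
                run_start = prev_t  # the run began at the previous frame
        else:
            if run_start is not None and prev_t - run_start >= min_duration_s:
                windows.append((run_start, prev_t))
            run_start = None
        prev_t, prev_c = t, c
    # Close a run that lasts until the end of the recording.
    if run_start is not None and prev_t - run_start >= min_duration_s:
        windows.append((run_start, prev_t))
    return windows
```

Too few windows relative to the number of poses you intended to capture is a sign that the recording contains mostly continuous motion.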

Unlike camera datasets, LiDAR ↔ LiDAR datasets are not yet motion-filtered by MetriCal. As a result, the target can be mis-detected when large motion artefacts are present, which degrades the calibration.

To avoid this, do one or more of the following:

- Flip the target such that the retroreflective tape is no longer visible during motion.

- Reduce the overall speed of motion between poses.

- Only record data at fixed positions when there is no motion present. This can be done by manually filtering PCD files (if using our folder data format) or by pausing message recording in ROS during motion.
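For the manual PCD filtering route, a small script can copy only the frames recorded inside known stationary windows into a new folder. This sketch assumes each PCD file is named by its capture timestamp in integer nanoseconds (for example `1700000000000000000.pcd`); that naming convention, the window list, and the `frames_to_keep` and `copy_kept_frames` helpers are hypothetical, so check how your own folder dataset is actually laid out before relying on it.

```python
import shutil
from pathlib import Path


def frames_to_keep(pcd_paths, windows_ns):
    """Select files whose timestamp (assumed to be the file stem, in ns) lies in any window."""
    kept = []
    for path in pcd_paths:
        path = Path(path)
        try:
            stamp = int(path.stem)
        except ValueError:
            continue  # not named by timestamp; skip rather than guess
        if any(start <= stamp <= end for start, end in windows_ns):
            kept.append(path)
    return sorted(kept, key=lambda p: int(p.stem))


def copy_kept_frames(src_dir, dst_dir, windows_ns):
    """Copy the selected frames into a new folder, leaving the original capture untouched."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    kept = frames_to_keep(sorted(src.glob("*.pcd")), windows_ns)
    for path in kept:
        shutil.copy2(path, dst / path.name)
    return kept
```

Copying rather than deleting keeps the original recording intact, so you can re-run the selection with different windows if a calibration turns out poorly.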