Camera ↔ IMU Calibration Guide

Unlike other modalities supported by MetriCal, IMU calibrations do not require any specific object space or target, because these components (accelerometers and gyroscopes) measure forces and rotational velocities directly. Note that for a successful IMU calibration, MetriCal requires that your IMU stream at a minimum of 100 Hz, and ideally at 200 Hz or higher. You are welcome to calibrate at a higher IMU rate than you consume during normal operation; this should not affect how applicable the calibration is at runtime.
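Before recording, it can help to confirm the IMU rate from the message timestamps. The sketch below uses hypothetical helpers that are not part of MetriCal. It assumes you have already extracted the IMU timestamps (in seconds) from your recording, and it applies only the 100 Hz minimum and 200 Hz recommendation stated above.

```python
from statistics import median

# Thresholds taken from this guide; the helper functions below are hypothetical.
MIN_RATE_HZ = 100.0
RECOMMENDED_RATE_HZ = 200.0


def estimate_rate_hz(timestamps_s):
    """Estimate an IMU sample rate from increasing timestamps in seconds.

    The median interval is used so that a few dropped or late samples do not
    skew the estimate.
    """
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:]) if b > a]
    if not intervals:
        raise ValueError("need at least two increasing timestamps")
    return 1.0 / median(intervals)


def classify_rate(rate_hz):
    """Compare a rate against the minimum and recommended rates above."""
    if rate_hz < MIN_RATE_HZ:
        return "too slow"
    if rate_hz < RECOMMENDED_RATE_HZ:
        return "meets minimum"
    return "meets recommendation"


if __name__ == "__main__":
    # Synthetic 250 Hz timestamps standing in for a real IMU topic.
    stamps = [i / 250.0 for i in range(1000)]
    rate = estimate_rate_hz(stamps)
    print(f"{rate:.1f} Hz: {classify_rate(rate)}")
```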

MetriCal currently does not perform well on IMU ↔ camera calibrations that involve a rolling shutter camera. We advise calibrating with a global shutter camera whenever possible.

Example Dataset and Manifest

We've captured an example of a good IMU ↔ Camera calibration dataset that you can use to test out MetriCal. If it's your first time performing an IMU calibration using MetriCal, it might be worth running through this dataset once just so that you can get a sense of what good data capture looks like.

This dataset features:

- Observations as an MCAP

- Two infrared cameras

- One color camera

- One IMU

- Three AprilGrid-style targets arranged in a box formation

The Manifest

```toml
[project]
name = "MetriCal Demo: Camera IMU Pipeline"
version = "15.0.0"
description = "Pipeline for running MetriCal on a camera-imu dataset, with 3 boards forming a box."
workspace = "metrical-results"

## === VARIABLES ===

[project.variables.dataset]
description = "Path to the input MCAP dataset."
value = "camera_imu_box.mcap"

[project.variables.object-space]
description = "Path to the input object space JSON file."
value = "camera_imu_box_objects.json"

## === STAGES ===

[stages.first-stage]
command = "init"
dataset = "{{variables.dataset}}"
reference-source = []
topic-to-model = [
    ["*infra*", "opencv-radtan"],
    ["*color*", "opencv-radtan"],
    ["*imu*", "scale-shear"]
]
# ...more options...
initialized-plex = "{{auto}}"

[stages.second-stage]
command = "calibrate"
dataset = "{{variables.dataset}}"
input-plex = "{{first-stage.initialized-plex}}"
input-object-space = "{{variables.object-space}}"
camera-motion-threshold = "lenient"
render = true
# ...more options...
detections = "{{auto}}"
results = "{{auto}}"
```

Let's take a look at some of the important details of this manifest:

- Our first stage, the Init command, is assigning all topics that match the

*infra*pattern to theopencv-radtanmodel. Similar glob matching is used for color cameras and IMU topics. This is a convenient way to assign models to topics without needing to know the exact topic names ahead of time. Don't worry: MetriCal will yell at you if you have conflicting topics that match the same pattern. - Our second stage, the Calibrate command, has a

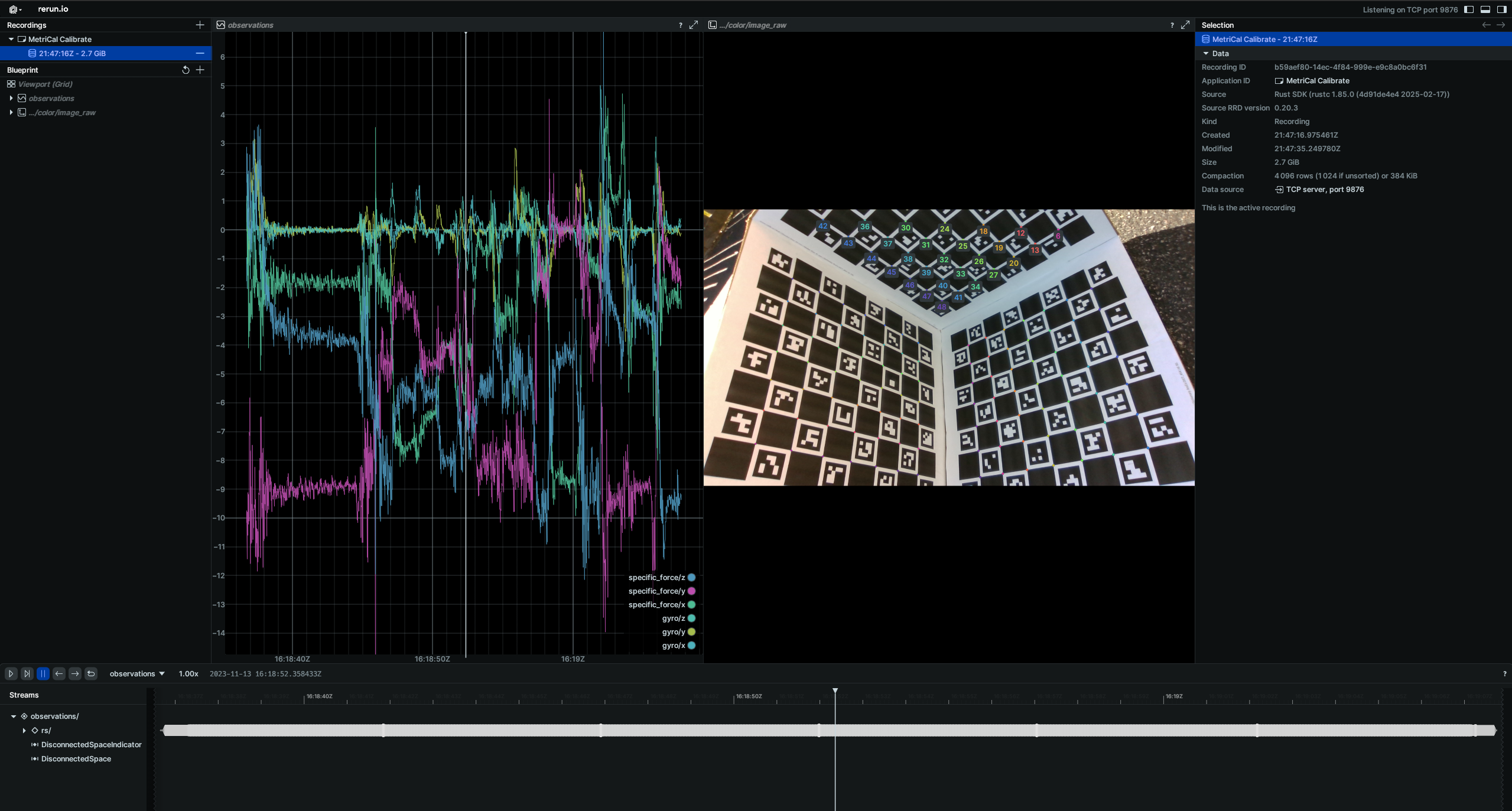

lenientcamera motion threshold. IMU calibration necessarily needs motion to excite all axes during capture, so it wouldn't be a great idea to filter out all of the motion in the dataset. - Our second stage is rendered! This flag will allow us to watch the detection phase of the calibration as it happens in real time. This can have a large impact on performance, but is invaluable for debugging data quality issues.

MetriCal depends on Rerun for all of its rendering. As such, you'll need a specific version of Rerun installed on your machine to use the `--render` flag. Please ensure that you've followed the visualization configuration instructions before running this manifest.
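To make the glob matching concrete, here is a rough Python sketch of how the `topic-to-model` patterns from the manifest could map topics to models. It relies on the standard library's `fnmatch` semantics, where `*` also matches `/`. Whether MetriCal matches topics or reports conflicts in exactly this way is an assumption, and both the topic names and the `assign_models` helper are hypothetical.

```python
from fnmatch import fnmatchcase

# (pattern, model) pairs copied from topic-to-model in the example manifest.
TOPIC_TO_MODEL = [
    ("*infra*", "opencv-radtan"),
    ("*color*", "opencv-radtan"),
    ("*imu*", "scale-shear"),
]


def assign_models(topics, rules):
    """Assign each topic the model of the glob pattern(s) it matches.

    Topics that match no pattern are left out. A topic whose matching
    patterns name different models is treated as a conflict (an assumption
    about what counts as "conflicting").
    """
    assignments = {}
    for topic in topics:
        models = {model for pattern, model in rules if fnmatchcase(topic, pattern)}
        if len(models) > 1:
            raise ValueError(f"{topic!r} matches patterns with different models: {sorted(models)}")
        if models:
            assignments[topic] = models.pop()
    return assignments


if __name__ == "__main__":
    # Hypothetical topic names; substitute the ones in your own recording.
    topics = [
        "/camera/infra1/image_rect_raw",
        "/camera/infra2/image_rect_raw",
        "/camera/color/image_raw",
        "/camera/imu",
    ]
    for topic, model in assign_models(topics, TOPIC_TO_MODEL).items():
        print(f"{topic} -> {model}")
```

Because `*` matches any characters, a topic such as `/camera/infra_imu` would match both `*infra*` and `*imu*`. That is exactly the kind of overlap worth avoiding when naming topics.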

Running the Manifest

With a copy of the dataset downloaded and the manifest file created, you should be ready to roll:

```shell
metrical run camera_imu_box_manifest.toml
```

While the calibration is running, take note of the frequency and magnitude of the sensor motion, as well as the still periods that follow periods of motion. When it comes time to capture your own data, try to replicate these motion patterns as closely as you can.

When the run finishes, you'll be left with three artifacts:

- `initialized-plex.json`: our initialized plex from the first stage.

- `report.html`: a human-readable summary of the calibration run. Everything in the report is also logged to your console in real time during the calibration. You can learn more about interpreting the report here.

- `results.mcap`: a file containing the final calibration and various other metrics. You can learn more about results here, and about manipulating your results using `shape` commands here.

And that's it! Hopefully this trial run will have given you a better understanding of how to capture your own IMU ↔ Camera calibration.

Data Capture Guidelines

Maximize View of Object Spaces for Duration of Capture

IMU calibrations are structured such that MetriCal jointly solves for the first-order gyroscope and accelerometer biases in addition to the relative IMU-from-camera extrinsic. It does this by comparing the world pose (or world extrinsic) of the camera between frames with the motion measured by the IMU's accelerometer and gyroscope.
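The comparison described above can be written down using a common textbook IMU model. This is a sketch under stated assumptions, not MetriCal's documented formulation. It uses additive biases and white noise only, whereas the `scale-shear` model named in the manifest presumably adds scale and shear terms that are omitted here. The symbols are defined as follows:

- $T_{IC}$ is the IMU-from-camera extrinsic.
- $T_{WC}(t_k)$ is the camera's world pose at camera frame $k$.
- $t_j$ are the IMU sample times.
- $\boldsymbol{\omega}(t_j)$ is the IMU's angular velocity in its own frame.
- $\mathbf{a}^W(t_j)$ is its acceleration in the world frame.
- $\mathbf{g}^W$ is gravity in the world frame.
- $\mathbf{b}_g$ and $\mathbf{b}_a$ are the gyroscope and accelerometer biases.
- $\operatorname{Exp}$ maps a rotation vector to a rotation matrix.

```latex
\begin{aligned}
T_{WI}(t_k) &= T_{WC}(t_k)\,T_{IC}^{-1} \\
\tilde{\boldsymbol{\omega}}_j &= \boldsymbol{\omega}(t_j) + \mathbf{b}_g + \mathbf{n}_{g,j} \\
\tilde{\mathbf{a}}_j &= R_{WI}(t_j)^{\top}\big(\mathbf{a}^W(t_j) - \mathbf{g}^W\big) + \mathbf{b}_a + \mathbf{n}_{a,j} \\
R_{WI}(t_k)^{\top} R_{WI}(t_{k+1}) &\approx \prod_{t_k \le t_j < t_{k+1}} \operatorname{Exp}\big((\tilde{\boldsymbol{\omega}}_j - \mathbf{b}_g)\,\Delta t_j\big)
\end{aligned}
```

The first line converts each camera pose into an IMU pose through the extrinsic. The last line states that the rotation between two camera frames should match the bias-corrected gyroscope rates integrated over the same interval, with the product taken in time order. A solver can adjust $T_{IC}$, $\mathbf{b}_g$ and $\mathbf{b}_a$ until both sides agree; without a camera pose at either end of an interval, there is nothing to compare against.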

Because of how this problem is posed, the best way to produce consistent, precise IMU ↔ camera calibrations is to maximize the visibility of one or more targets in the object space from at least one of the cameras being calibrated alongside the IMU. Put differently: avoid capturing stretches of data where the IMU is recording but no camera can see any object space or target. Doing so can lead to misleading bias estimates.
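One way to audit a recording for these blind stretches is to look for gaps between target detections. The sketch below is hypothetical. It assumes you already have the timestamps of frames in which any camera detected any target; extracting them is not shown. The `max_gap_s` value is an arbitrary tolerance, not a MetriCal setting.

```python
def find_blind_intervals(detection_times_s, imu_start_s, imu_end_s, max_gap_s):
    """Return (start, end) intervals longer than max_gap_s during which the IMU
    was recording but no camera detected any target.

    detection_times_s: timestamps (seconds) of frames in which any camera
    observed any target.
    """
    inside = sorted(t for t in detection_times_s if imu_start_s <= t <= imu_end_s)
    # Include the start and end of the IMU recording so that leading and
    # trailing blind stretches are reported too.
    boundaries = [imu_start_s] + inside + [imu_end_s]
    return [(a, b) for a, b in zip(boundaries, boundaries[1:]) if b - a > max_gap_s]


if __name__ == "__main__":
    detections = [0.1, 0.2, 0.3, 1.5, 1.6]
    print(find_blind_intervals(detections, 0.0, 2.0, max_gap_s=0.5))
```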

Excite All IMU Axes During Capture

Like every other modality, IMU calibration is an entirely data-driven process. In particular, the data must contain observable translational and rotational motion for MetriCal to understand the path that the IMU has followed.

This is what is meant by "exciting" an IMU: accelerations and rotational velocities must be observable in the data (distinct enough from the measurement noise) so that they can be separated from, e.g., the biases. When capturing data to calibrate an IMU against one or more cameras, it is therefore important to move the IMU translationally along all accelerometer axes and rotationally about all gyroscope axes. This motion can be repetitive, so long as it reaches a sufficient magnitude. That magnitude is difficult to pin down because it depends on the kind of IMU being calibrated (e.g. MEMS IMU, how large it is, what kinds of noise it measures, etc.).
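As a crude, hypothetical check on excitation, you can compare how much each axis varies over the capture with the sensor's noise level. The sketch assumes you have the raw samples as (x, y, z) tuples and a per-sample noise standard deviation, for example measured from a few seconds of data recorded while the IMU sits still. The factor of 10 is an illustrative choice, not a MetriCal threshold, and passing this check does not guarantee a good calibration.

```python
from statistics import pstdev


def unexcited_axes(samples, noise_std, factor=10.0):
    """Return the indices (0=x, 1=y, 2=z) of axes that barely move.

    samples: sequence of (x, y, z) readings from one sensor, e.g. the gyroscope.
    noise_std: per-sample noise standard deviation, in the same units.
    An axis counts as excited when its standard deviation over the capture
    is at least `factor` times the noise standard deviation.
    """
    per_axis = list(zip(*samples))  # transpose into three per-axis sequences
    return [i for i, values in enumerate(per_axis) if pstdev(values) < factor * noise_std]
```

Running this separately on the accelerometer and gyroscope streams gives a quick hint about which axes still need more motion.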

We suggest alternating between periods of "excitement" or motion with the IMU and holding still so that the camera(s) can accurately and precisely measure the given object space.
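To check that a capture actually alternates between motion and stillness, you could segment the gyroscope stream by angular-rate magnitude. This helper is hypothetical and the threshold is arbitrary. It ignores the accelerometer and does no smoothing, so a single noisy sample can split a segment.

```python
def segment_motion(gyro_samples, timestamps_s, still_threshold_rad_s):
    """Split a gyroscope stream into alternating "still" and "moving" segments.

    gyro_samples: sequence of (wx, wy, wz) angular rates in rad/s.
    Returns a list of (label, start_s, end_s) tuples, where consecutive
    samples with the same label are merged into one segment.
    """
    segments = []
    for (wx, wy, wz), t in zip(gyro_samples, timestamps_s):
        magnitude = (wx * wx + wy * wy + wz * wz) ** 0.5
        label = "still" if magnitude < still_threshold_rad_s else "moving"
        if segments and segments[-1][0] == label:
            # Extend the current segment to this sample's timestamp.
            segments[-1] = (label, segments[-1][1], t)
        else:
            segments.append((label, t, t))
    return segments
```

A capture that follows the advice above should produce several "moving" segments, each followed by a "still" one.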

If you find yourself still having trouble getting a sufficient number of observations to produce reliable calibrations, we suggest bumping up the threshold for our motion filter heuristic when calling `metrical calibrate`. This is controlled by the `--camera-motion-threshold` flag. A value of 3.0 through 5.0 can sometimes improve the quality of the calibration a significant amount.

Reduce Motion Blur in Camera Images

This advice holds for both multi-camera and IMU ↔ camera calibrations. It is often advisable to reduce the effects of motion in the images so that you calibrate against crisper, more detailed images. Some ways to do this are to:

- Always use a global shutter camera

- Reduce the overall exposure time of the camera

- Avoid over-exciting the IMU, and don't be afraid to slow down if you find that the object space is rarely or never detected.
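A back-of-the-envelope estimate shows why shorter exposures and gentler motion help. Under a small-angle approximation, rotating at ω rad/s during an exposure of t seconds smears points near the image center by roughly ω · t · f pixels, where f is the focal length in pixels. Lateral translation at v m/s adds roughly v · t · f / Z pixels for a target at distance Z meters. The helper below is a hypothetical illustration of that arithmetic, not something MetriCal computes.

```python
def estimate_blur_px(angular_rate_rad_s, exposure_s, focal_length_px,
                     linear_speed_m_s=0.0, target_distance_m=None):
    """Rough motion-blur estimate, in pixels, accumulated during one exposure.

    Rotation term: small-angle approximation for points near the image center.
    Translation term: lateral motion relative to a target at target_distance_m.
    The two terms are simply added as a worst case.
    """
    blur = angular_rate_rad_s * exposure_s * focal_length_px
    if target_distance_m is not None:
        blur += linear_speed_m_s * exposure_s * focal_length_px / target_distance_m
    return blur
```

For example, rotating at 1 rad/s with a 5 ms exposure and a 600 px focal length gives about 3 px of blur, and halving the exposure halves that.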

Troubleshooting

If you encounter errors during calibration, please refer to our Errors and Troubleshooting documentation.

Remember that all measurements for your targets should be in meters, and you should ensure visibility of as much of the target as possible when collecting data.