LiDAR ↔ LiDAR Calibration Guide

LiDAR ↔ LiDAR calibration is purely extrinsic. This means that it suffers from all of the same maladies that IMU and Local Navigation Systems do: without constraining every degree of freedom, calibration will be difficult.

Luckily, we can get this done with a simple circle target: a circle of retroreflective tape on a flat surface. That's it! In MetriCal setups, this circle is often taped onto another target type for multi-purpose use, like a ChArUco board or AprilGrid. However, there's no requirement to do so if you're just calibrating LiDARs.

Example Dataset and Manifest

We've captured an example of a good LiDAR ↔ LiDAR calibration dataset that you can use to test out MetriCal. If it's your first time performing a LiDAR calibration using MetriCal, it might be worth running through this dataset once just so that you can get a sense of what good data capture looks like.

This dataset features:

- Observations as an MCAP

- Two LiDAR sensors

- One circle target

The Manifest

[project]

name = "MetriCal Demo: LiDAR-LiDAR Manifest"

version = "15.0.0"

description = "Manifest for running MetriCal on a dataset with multiple LiDAR."

workspace = "metrical-results"

## === VARIABLES ===

[project.variables.dataset]

description = "Path to the input dataset containing calibration data."

value = "lidar_lidar_va.mcap"

[project.variables.object-space]

description = "Path to the input object space JSON file."

value = "lidar_lidar_va_objects.json"

## === STAGES ===

[stages.lidar-init]

command = "init"

dataset = "{{variables.dataset}}"

reference-source = []

topic-to-model = [["/livox/lidar", "lidar"], ["/velodyne_points", "lidar"]]

remap-reference-component = []

overwrite-strategy = "replace"

uuid-strategy = "inherit-reference"

initialized-plex = "{{auto}}"

[stages.lidar-calibrate]

command = "calibrate"

dataset = "{{variables.dataset}}"

input-plex = "{{lidar-init.initialized-plex}}"

input-object-space = "{{variables.object-space}}"

optimization-profile = "standard"

lidar-motion-threshold = "strict"

preserve-input-constraints = false

disable-ore-inference = false

overwrite-detections = false

override-diagnostics = false

render = true

detections = "{{auto}}"

results = "{{auto}}"

Note that our second stage sets render = true. This flag lets us watch the detection phase of the calibration as it happens in real time. Rendering can have a large impact on performance, but it is invaluable for debugging data quality issues.

MetriCal depends on Rerun for all of its rendering. As such, you'll need a specific version of Rerun installed on your machine to use the --render flag. Please ensure that you've followed the visualization configuration instructions before running this manifest.

Running the Manifest

With a copy of the dataset downloaded and the manifest file created, you should be ready to roll:

metrical run lidar_lidar_manifest.toml

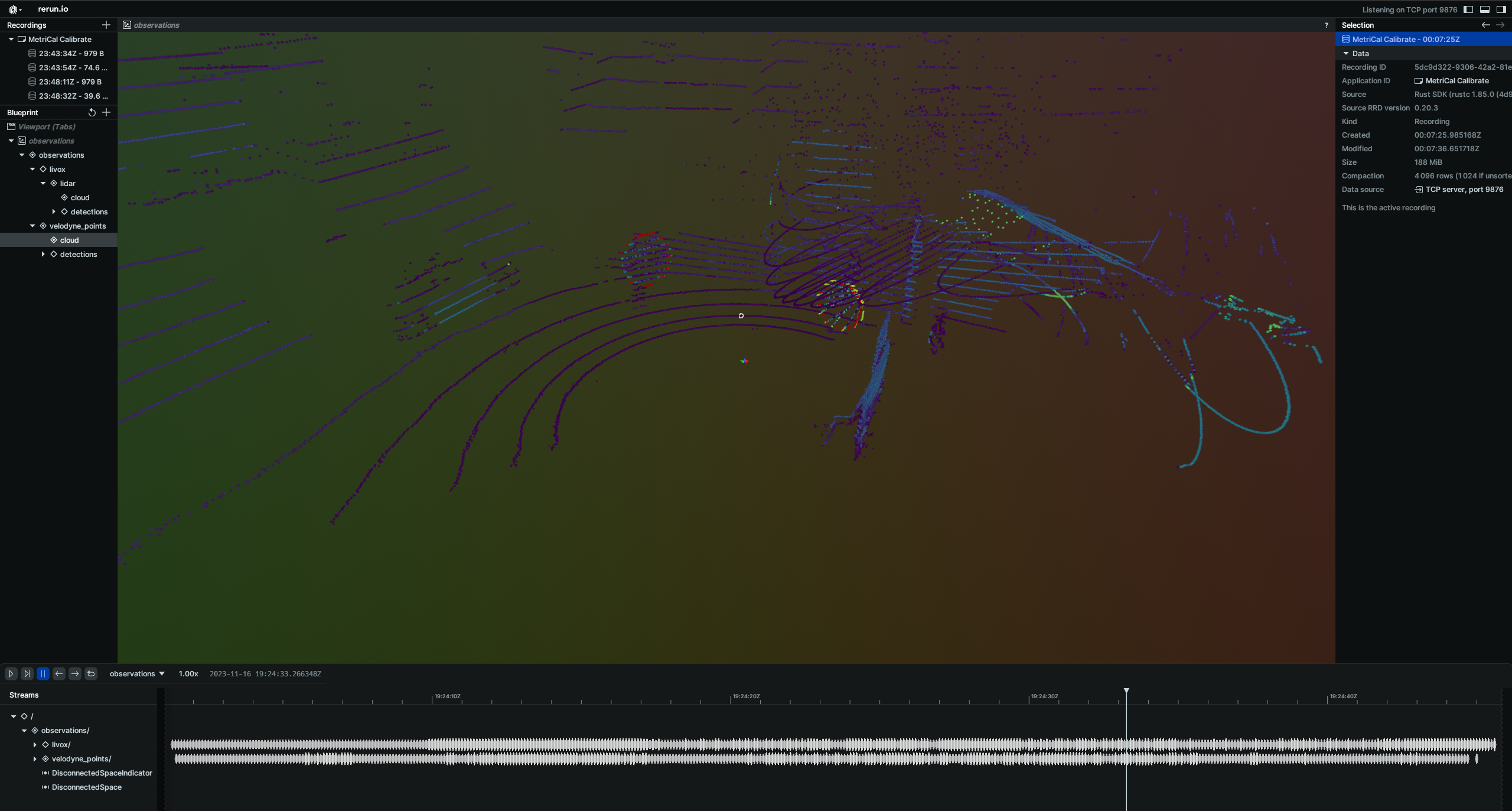

When you start it, it will display a visualization window like the following with two LiDAR point clouds in the same coordinate frame (but not registered to one another yet). Note that the LiDAR circle detections will show up as red points in the point cloud.

While the calibration is running, take specific note of the target motion patterns, presence of still periods, and breadth of coverage. When it comes time to design a motion sequence for your own systems, try to apply any learnings you take from watching this capture.

When the run finishes, you'll be left with three artifacts:

- initialized-plex.json: our initialized plex from the first stage.

- report.html: a human-readable summary of the calibration run. Everything in the report is also logged to your console in realtime during the calibration. You can learn more about interpreting the report here.

- results.mcap: a file containing the final calibration and various other metrics. You can learn more about results here and about manipulating your results using shape commands here.

Data Capture Guidelines

Many of the tips for LiDAR ↔ LiDAR data capture are similar to Camera ↔ LiDAR capture. Below we've outlined best practices for capturing a dataset that will consistently produce a high-quality calibration between two LiDAR components.

Best Practices

| DO | DON'T |
|---|---|
| ✅ Vary distance from sensors to target to capture a wider range of readings. | ❌ Stand at a single distance from the sensors. |
| ✅ Ensure good point density on the target for all LiDAR sensors. | ❌ Only capture poses where the target does not have sufficient point density. |
| ✅ Rotate the target on its planar axis to capture poses at different orientations. | ❌ Keep the board in a single orientation for every pose. |
| ✅ Maximize convergence angles by angling the target up/down and left/right during poses. | ❌ Keep the target in a single orientation, minimizing convergence angles. |
| ✅ Pause in between poses (1-2 seconds) to eliminate motion artifacts. | ❌ Move the target constantly or rapidly during data capture. |
| ✅ Achieve convergence angles of 35° or more on each axis when possible. | |
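As a rough way to sanity-check the 35° guideline against your own capture, the sketch below estimates how much the target was tilted left/right and up/down across a set of poses. It rests on several assumptions that are not from MetriCal: "convergence angle" is read here as the spread of target tilt about each axis, the sensor frame is taken as x forward, y left, z up, and the target plane normals are assumed to come from your own plane fit. The `tilt_spread_deg` function is hypothetical.

```python
import math

MIN_SPREAD_DEG = 35.0  # guideline value from the table above


def tilt_spread_deg(normals):
    """Return (left/right, up/down) tilt spread in degrees for target plane normals.

    Assumed sensor frame for this sketch: x forward, y left, z up.
    Normals do not need to be unit length.
    """
    horizontal, vertical = [], []
    for nx, ny, nz in normals:
        # The sign of a plane normal is arbitrary, so flip it to point along +x.
        if nx < 0:
            nx, ny, nz = -nx, -ny, -nz
        horizontal.append(math.degrees(math.atan2(ny, nx)))
        vertical.append(math.degrees(math.atan2(nz, nx)))
    return (max(horizontal) - min(horizontal), max(vertical) - min(vertical))


if __name__ == "__main__":
    c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
    # Three poses: facing the sensor, then yawed 30 degrees each way.
    normals = [(-1.0, 0.0, 0.0), (-c, s, 0.0), (-c, -s, 0.0)]
    left_right, up_down = tilt_spread_deg(normals)
    print(f"left/right spread: {left_right:.1f} deg (ok: {left_right >= MIN_SPREAD_DEG})")
    print(f"up/down spread:    {up_down:.1f} deg (ok: {up_down >= MIN_SPREAD_DEG})")
```

In this example the target was only ever yawed, so the up/down spread falls short of the guideline; a capture like that would benefit from additional poses with the target pitched up and down.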

Maximize Variation Across All 6 Degrees Of Freedom

When capturing the circular target with multiple LiDAR components, ensure that the target can be seen from a variety of poses by varying:

- Roll, pitch, and yaw rotations of the target relative to the LiDAR sensors

- X, Y, and Z translations between poses

MetriCal performs LiDAR-based calibrations best when the target can be seen from a range of different depths. Try moving toward and away from the target in addition to moving laterally relative to the sensors.

The field of view of a LiDAR component is often much greater than that of a camera, so be sure to capture data that fills this larger field of view.
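One way to check depth and field-of-view coverage after a capture is to summarize where the target center was seen in each LiDAR's frame. The sketch below is illustrative only: it assumes you already have target center positions in meters in a sensor frame with x forward, y left, z up, and the `coverage_summary` function is a hypothetical helper, not part of MetriCal.

```python
import math


def coverage_summary(centers):
    """Summarize range, azimuth, and elevation coverage of target centers.

    centers: iterable of (x, y, z) positions in meters in the sensor frame
    (assumed here to be x forward, y left, z up).
    Note: azimuth is a plain min/max and does not handle wrap-around at
    +/-180 degrees, which matters for 360-degree LiDARs.
    """
    ranges, azimuths, elevations = [], [], []
    for x, y, z in centers:
        ranges.append(math.sqrt(x * x + y * y + z * z))
        azimuths.append(math.degrees(math.atan2(y, x)))
        elevations.append(math.degrees(math.atan2(z, math.hypot(x, y))))
    return {
        "range_m": (min(ranges), max(ranges)),
        "azimuth_deg": (min(azimuths), max(azimuths)),
        "elevation_deg": (min(elevations), max(elevations)),
    }


if __name__ == "__main__":
    # Made-up positions purely for illustration.
    centers = [(2.0, 0.0, 0.0), (0.0, 4.0, 0.0), (3.0, 0.0, 3.0)]
    for name, (low, high) in coverage_summary(centers).items():
        print(f"{name}: {low:.2f} to {high:.2f}")
```

A narrow range or angular span in this summary suggests the capture stayed at one depth or in one part of the field of view, which is what the guidelines above advise against.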

Troubleshooting

If you encounter errors during calibration, please refer to our Errors and Troubleshooting documentation.

Remember that all measurements for your targets should be in meters, and you should ensure visibility of as much of the target as possible when collecting data.